This side quest is due by Sunday, December 10, 2023 11:59pm to earn the achievement.

Part I - Raytracing

For this Side Quest, you will write a basic raytracer and implement some of the functionality that raytracers enable you as a programmer to add with relative ease. You will start the assignment with base code, and will implement a number of elements of functionality:

- the ability to calculate a camera ray for a given pixel

- the ability to intersect rays against spheres and planes

- Phong reflectance to shade the point that gets hit by a camera ray

- the addition of a shadow ray cast at each intersection point to implement hard shadows

- the addition of a reflection ray to simulate shiny objects

Your final product will be able to support multiple objects, multiple lights, and varying material properties, all of which can be read in from file.

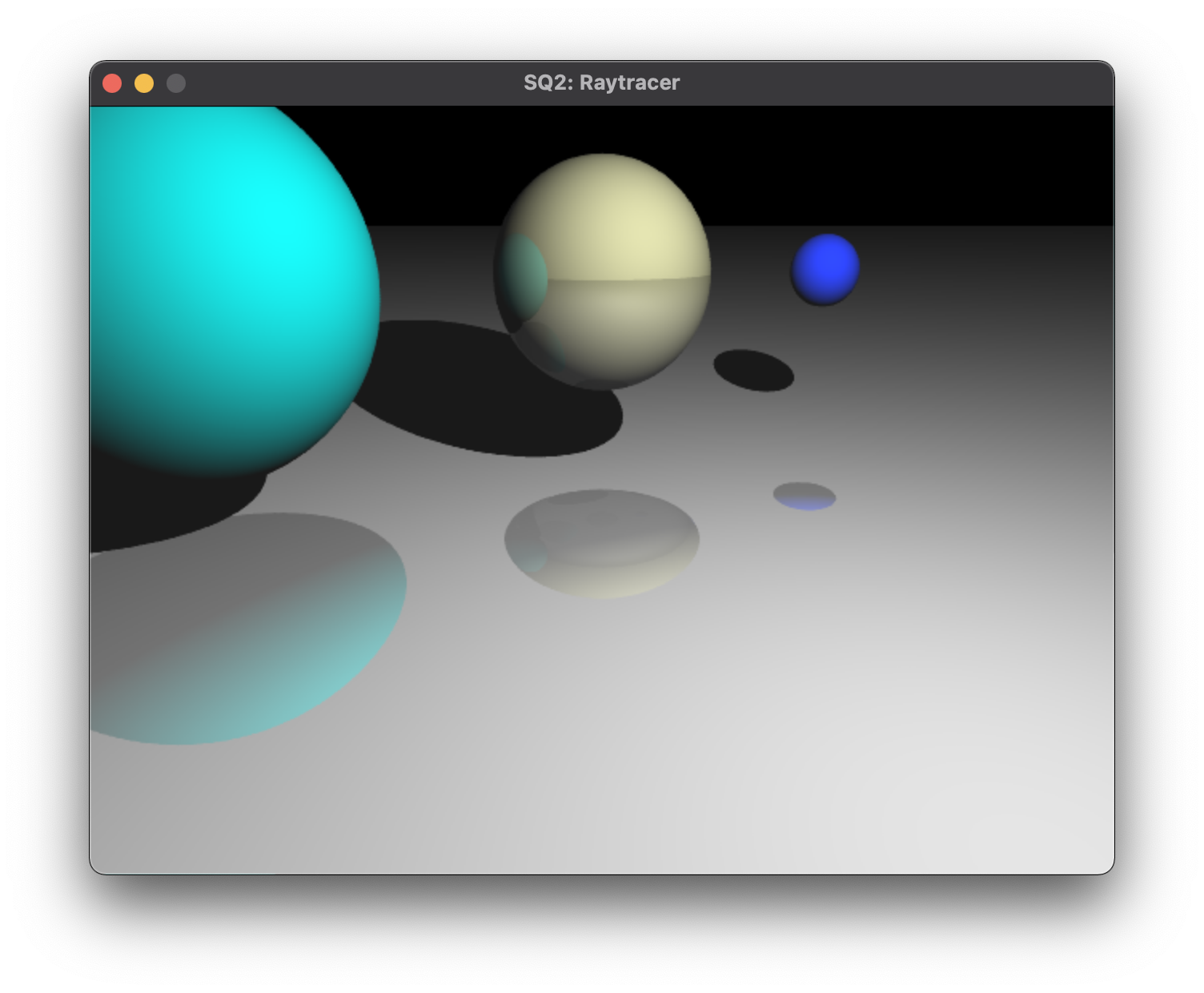

A rendered frame from the raytracer.

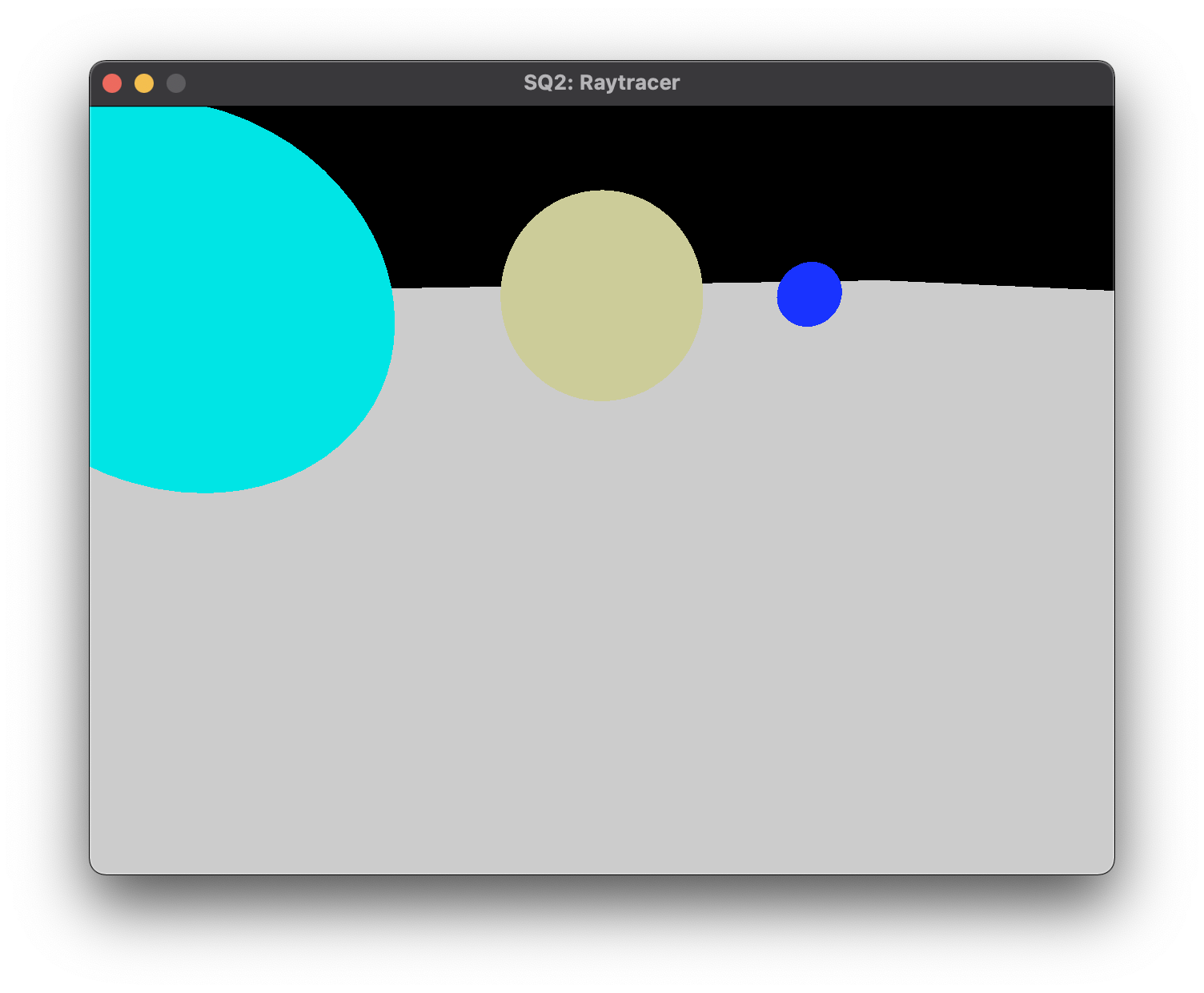

The base code that you are given is a basic OpenGL program that can load spheres and planes in from file and display them with OpenGL in a "preview mode"; the user can navigate around the scene using a camera that follows the arcball-camera movement paradigm, and the objects drawn in the OpenGL preview accurately reflect the object data that is read in from file. When the user presses the 'r' key, a callback is triggered that runs the raytracing code. This callback takes the list of objects and lights in the scene, as well as the camera object, and generates, as its output, a framebuffer that is the size of the window when it was triggered, containing the final image. That image is then attached to a texture, which is rendered over a quad covering the screen. The user can then press the 's' key to write a PPM version of the rendered image to a file.

The previous frame, in OpenGL preview mode.

Base Code

You can download the base code for this assignment here (raytracer starter code). There are a number of classes that encapsulate the work that needs to be done in an OpenGL pipeline; the ReflectiveMaterial and PointLight classes should be familiar to you, as they are very similar to the classes you have used or created for previous or upcoming homework assignments.

There are a few new classes that are also particularly relevant to this assignment. They are:

Ray

This class is a storage class for rays: it holds two values, representing an origin and a direction.

Shape

This is an abstract class that allows us to lump all of our renderable objects into the same vector inside of main.cpp. Instead of having separate arrays for Spheres, Planes, Triangles, Meshes, etc., all of those classes extend the Shape class and can be used interchangeably (i.e., regardless of the object type, you can call shapes[i]->drawViaOpenGL();). All classes that inherit from the Shape class must implement the drawViaOpenGL() function, which draws the object using OpenGL calls, and the intersectWithRay() function, which computes the intersection between a ray and the object and returns information about the intersection, if it occurred.

Sphere

Has a position (center of the sphere) and a radius. Already contains an implemented drawViaOpenGL() function so that it can be used in Preview Mode. You will need to implement its intersectWithRay() function.

Plane

Defined by a point on the plane and the normal of the plane. Already contains an implemented drawViaOpenGL() function, but note that it draws the plane as a finite, bounded quad (in reality, the plane extends infinitely). You will need to implement its intersectWithRay() function.

IntersectionData

This is a storage class to hold information about an intersection, if one occurs. It is returned from the intersectWithRay() functions: if the ray passed into that function intersected that object, then the wasValidIntersection boolean member variable should be set to true. If the intersection was valid, then its member variables will hold a) the point of intersection, b) the surface normal of the object at the point of intersection, and c) a copy of the shape's Material object. Your intersection functions must fill these member variables.

Places where you need to write code are indicated with TODOs. Pressing 'r' calls a raytraceImage() function which is located in Raytracer.cpp. This function needs to fill each pixel with a color based on the scene.

Camera Rays

The first step of your raytracer is computing the camera ray (primary ray) for each pixel of an image. For more information on computing primary rays, see this website. Note that thanks to our OpenGL formulation, we have the vertical field of view and must compute the horizontal field of view.

You may find it easier to think of the terms involved in camera ray computation as a vector math problem. If we assume that the image plane is a unit distance away from the camera, we can get a point in the center of the image plane by adding the camera's position to its direction. We can compute the width and height of the image plane using the tangent functions as described in the link above. Lastly, we can determine the vectors that lie in the image plane -- the first is the camera's local X axis, perpendicular to the viewing direction and global up vector (0,1,0), and the second is the camera's local Y axis, perpendicular to its local X axis and local Z axis (direction) -- and move along these vectors based on the percentage of the image plane's width and height.
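The vector-math formulation above can be sketched as follows. This is a minimal illustration, not the base code's API: Vec3, the Ray layout, and the helper name computeCameraRay() are all hypothetical stand-ins, and the sketch assumes the viewing direction is normalized, is not parallel to the global up vector, and that pixel row 0 is the bottom of the image.

```cpp
#include <cmath>

// Minimal stand-in vector type (assumption); the base code's own vector type would replace it.
struct Vec3 { double x, y, z; };
Vec3 operator+(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3 operator-(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
Vec3 operator*(Vec3 a, double s) { return {a.x * s, a.y * s, a.z * s}; }
double dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
Vec3 cross(Vec3 a, Vec3 b) {
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}
Vec3 normalize(Vec3 v) { return v * (1.0 / std::sqrt(dot(v, v))); }

// Hypothetical layout mirroring the Ray storage class: an origin and a direction.
struct Ray { Vec3 origin, direction; };

// Hypothetical helper: primary ray through the center of pixel (px, py).
// fovYDegrees is the vertical field of view; camDir must be normalized.
Ray computeCameraRay(int px, int py, int width, int height,
                     Vec3 camPos, Vec3 camDir, double fovYDegrees) {
    const double kPi = 3.14159265358979323846;

    // The image plane sits one unit in front of the camera, so its half-height
    // is tan(fovY / 2); the aspect ratio then gives the half-width.
    double halfHeight = std::tan(0.5 * fovYDegrees * kPi / 180.0);
    double halfWidth = halfHeight * static_cast<double>(width) / height;

    // Camera-local axes lying in the image plane.
    Vec3 globalUp{0.0, 1.0, 0.0};
    Vec3 localX = normalize(cross(camDir, globalUp));  // camera right
    Vec3 localY = cross(localX, camDir);               // camera up

    // Pixel center as a fraction of the image, remapped to [-1, 1].
    double u = 2.0 * (px + 0.5) / width - 1.0;
    double v = 2.0 * (py + 0.5) / height - 1.0;

    Vec3 pointOnPlane = camPos + camDir
                      + localX * (u * halfWidth)
                      + localY * (v * halfHeight);
    return {camPos, normalize(pointOnPlane - camPos)};
}
```

If the framebuffer stores row 0 at the top instead, the sign of v would need to be flipped.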

Intersection Testing and Shading

After being able to calculate the camera ray through each pixel, you need to intersect that ray against all objects in the scene and save the nearest intersection, if any. This can be done by iterating over each shape and calling its intersectWithRay() function -- but you will need to implement these functions for the Sphere and Plane classes. Start with Plane and move on to Sphere after that is confirmed to work.
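For reference, the standard ray-plane and ray-sphere tests can be sketched as below. This is only an illustration under stated assumptions: Vec3, Ray, and Hit are hypothetical stand-ins (Hit is a simplified version of IntersectionData that omits the material and adds a distance t), the free functions intersectPlane() and intersectSphere() are invented names rather than the base code's member functions, and the ray direction is assumed to be normalized.

```cpp
#include <cmath>

// Minimal stand-in vector type (assumption); the base code's own vector type would replace it.
struct Vec3 { double x, y, z; };
Vec3 operator+(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3 operator-(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
Vec3 operator*(Vec3 a, double s) { return {a.x * s, a.y * s, a.z * s}; }
double dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

struct Ray { Vec3 origin, direction; };  // direction assumed normalized

// Simplified stand-in for IntersectionData (no material; t is an added field).
struct Hit {
    bool wasValidIntersection = false;
    double t = 0.0;  // distance along the ray to the hit point
    Vec3 point{0.0, 0.0, 0.0};
    Vec3 normal{0.0, 0.0, 0.0};
};

// Plane in point-normal form: solve dot(origin + t*dir - planePoint, n) = 0 for t.
Hit intersectPlane(const Ray& ray, Vec3 planePoint, Vec3 planeNormal) {
    Hit hit;
    double denom = dot(ray.direction, planeNormal);
    if (std::fabs(denom) < 1e-9) return hit;  // ray is parallel to the plane
    double t = dot(planePoint - ray.origin, planeNormal) / denom;
    if (t <= 0.0) return hit;                 // plane lies behind the ray origin
    hit.wasValidIntersection = true;
    hit.t = t;
    hit.point = ray.origin + ray.direction * t;
    hit.normal = planeNormal;
    return hit;
}

// Sphere: solve |origin + t*dir - center|^2 = r^2, a quadratic in t.
// With a unit direction it reduces to t^2 + 2*b*t + c = 0.
Hit intersectSphere(const Ray& ray, Vec3 center, double radius) {
    Hit hit;
    Vec3 oc = ray.origin - center;
    double b = dot(oc, ray.direction);
    double c = dot(oc, oc) - radius * radius;
    double disc = b * b - c;
    if (disc < 0.0) return hit;      // no real roots: the ray misses the sphere
    double root = std::sqrt(disc);
    double t = -b - root;            // nearer root first
    if (t <= 0.0) t = -b + root;     // origin inside the sphere: take the far root
    if (t <= 0.0) return hit;        // sphere is entirely behind the ray
    hit.wasValidIntersection = true;
    hit.t = t;
    hit.point = ray.origin + ray.direction * t;
    hit.normal = (hit.point - center) * (1.0 / radius);  // unit outward normal
    return hit;
}
```

Storing the distance t alongside the hit point is one convenient way to compare intersections when searching for the nearest one.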

Once you know a ray has intersected with an object, and your intersectWithRay() function has appropriately filled in the intersection point, surface normal, and material in the IntersectionData object, you must compute a final color for the pixel. You must do this using the Phong Reflectance Model as discussed in class. Basically, shading a pixel requires iterating over all lights and summing the contribution from each light.
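The per-light summation might look like the sketch below. It is one common formulation of Phong reflectance and may differ in detail from the version covered in class; the Material and Light structs, their field names, and the phongShade() helper are hypothetical stand-ins for the base code's classes, and the surface normal is assumed to be normalized.

```cpp
#include <algorithm>
#include <cmath>
#include <vector>

// Minimal stand-in vector type (assumption), also used here for RGB colors.
struct Vec3 { double x, y, z; };
Vec3 operator+(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3 operator-(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
Vec3 operator*(Vec3 a, double s) { return {a.x * s, a.y * s, a.z * s}; }
Vec3 mul(Vec3 a, Vec3 b) { return {a.x * b.x, a.y * b.y, a.z * b.z}; }  // component-wise
double dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
Vec3 normalize(Vec3 v) { return v * (1.0 / std::sqrt(dot(v, v))); }

// Hypothetical simplified versions of the material and point light data.
struct Material { Vec3 diffuse, ambient, specular; double shininess; };
struct Light { Vec3 position, diffuse, ambient, specular; };

// Sums ambient + diffuse + specular over all lights for one surface point.
Vec3 phongShade(Vec3 point, Vec3 normal, Vec3 eyePos,
                const Material& m, const std::vector<Light>& lights) {
    Vec3 color{0.0, 0.0, 0.0};
    Vec3 toEye = normalize(eyePos - point);
    for (const Light& light : lights) {
        // The ambient term is always added, regardless of orientation.
        color = color + mul(m.ambient, light.ambient);

        Vec3 toLight = normalize(light.position - point);
        double nDotL = dot(normal, toLight);
        if (nDotL <= 0.0) continue;  // surface faces away from this light

        // Diffuse term scales with the cosine of the angle to the light.
        color = color + mul(m.diffuse, light.diffuse) * nDotL;

        // Specular term: reflect the light direction about the normal and
        // compare the result with the direction toward the eye.
        Vec3 reflected = normal * (2.0 * nDotL) - toLight;
        double rDotV = std::max(0.0, dot(reflected, toEye));
        color = color + mul(m.specular, light.specular) * std::pow(rDotV, m.shininess);
    }
    return color;
}
```

The result is not clamped here, so components can exceed 1 when several lights contribute.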

Shadows and Reflections (& Refractions)

Before computing the diffuse and specular contributions from a light, you must determine whether that point is in shadow. To do this, cast a shadow ray whose origin is the intersection point and whose direction points toward that light, and test it for intersection against the scene. (NOTE: to prevent rounding errors, move the intersection point a small amount along the direction vector before doing testing.) If the shadow ray hits any object between the point and the light, the point should receive only the ambient component of the lighting equation for that light.
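A shadow test along these lines could be sketched as follows. The scene is reduced to a list of spheres to keep the example short; Vec3, Sphere, hitDistance(), inShadow(), and the epsilon value of 1e-4 are all hypothetical choices, not part of the base code. Note that hits farther away than the light are ignored, since such objects cannot block it.

```cpp
#include <cmath>
#include <vector>

// Minimal stand-in vector type (assumption); the base code's own vector type would replace it.
struct Vec3 { double x, y, z; };
Vec3 operator+(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3 operator-(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
Vec3 operator*(Vec3 a, double s) { return {a.x * s, a.y * s, a.z * s}; }
double dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

// Hypothetical occluder type; a real scene would hold Shape objects instead.
struct Sphere { Vec3 center; double radius; };

// Distance along a unit-direction ray to the nearest hit, or -1 on a miss.
double hitDistance(Vec3 origin, Vec3 dir, const Sphere& s) {
    Vec3 oc = origin - s.center;
    double b = dot(oc, dir);
    double c = dot(oc, oc) - s.radius * s.radius;
    double disc = b * b - c;
    if (disc < 0.0) return -1.0;
    double root = std::sqrt(disc);
    double t = -b - root;
    if (t <= 0.0) t = -b + root;
    return (t > 0.0) ? t : -1.0;
}

// True if any occluder lies strictly between the point and the light.
bool inShadow(Vec3 point, Vec3 lightPos, const std::vector<Sphere>& occluders) {
    Vec3 toLight = lightPos - point;
    double distToLight = std::sqrt(dot(toLight, toLight));
    Vec3 dir = toLight * (1.0 / distToLight);

    // Nudge the origin off the surface so the ray does not immediately
    // re-hit the object it started on because of rounding error.
    const double kEpsilon = 1e-4;  // assumed value; tune for the scene's scale
    Vec3 origin = point + dir * kEpsilon;

    for (const Sphere& s : occluders) {
        double t = hitDistance(origin, dir, s);
        // Only hits closer than the light count as blockers.
        if (t > 0.0 && t < distToLight - kEpsilon) return true;  // early out
    }
    return false;
}
```

When inShadow() returns true for a light, only that light's ambient term would be added during shading.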

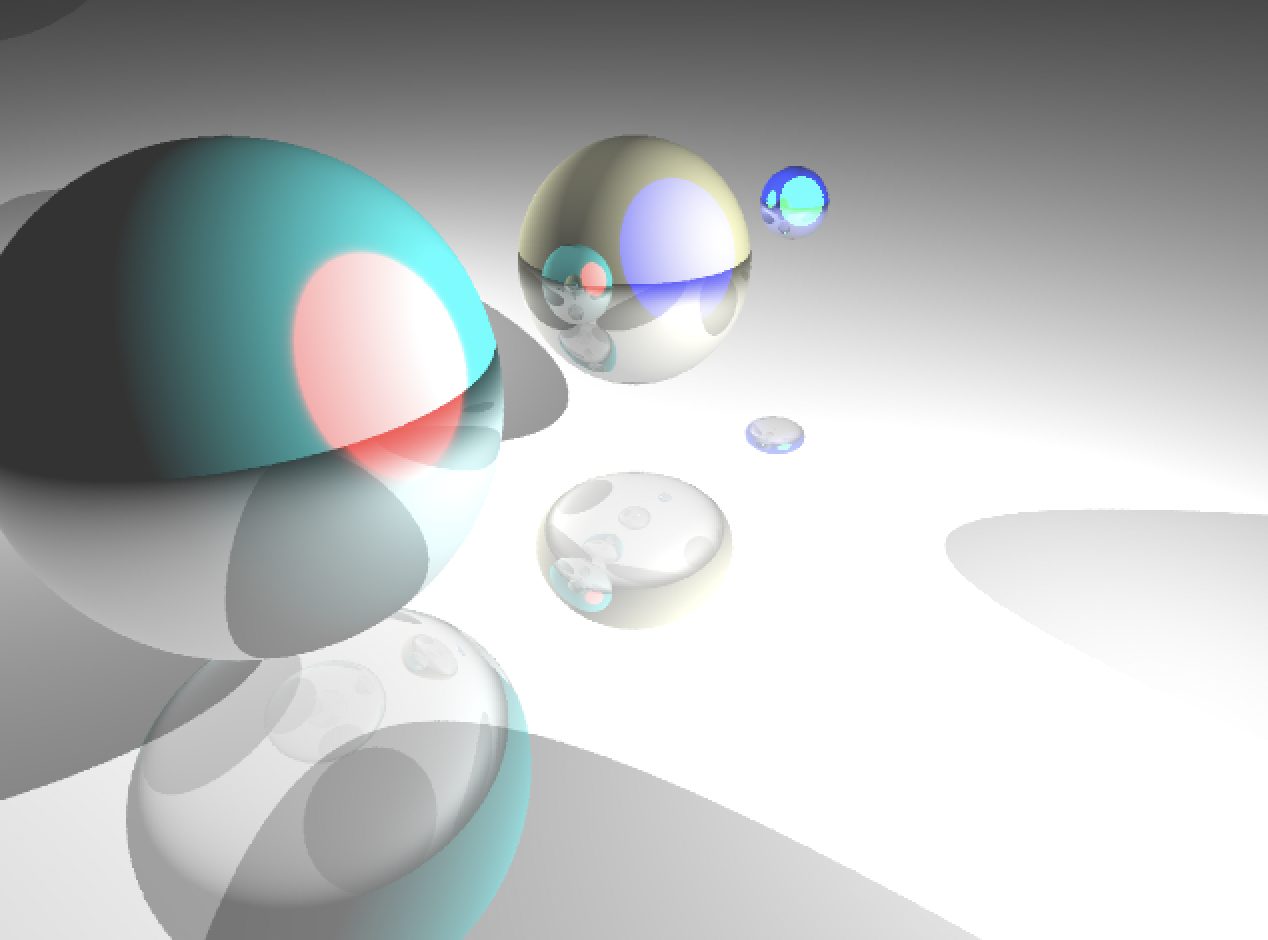

Lastly, you will notice that the Material class has one extra parameter -- reflectivity. When shading a point, if the reflectivity of an object is greater than zero, you must cast a reflection ray to determine the reflected color. NOTE: the same caveat as with shadow rays applies: you should move the intersection point a small amount along the reflection vector to avoid rounding errors. After finding the reflected color, you lerp the final color of the point as reflectivity * reflectedColor + (1 - reflectivity) * phongColor.
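The two pieces of arithmetic involved here, building the reflection ray and blending the colors, can be sketched as below. The helper names reflect(), makeReflectionRay(), and blendReflection(), the Vec3 and Ray types, and the epsilon value are hypothetical; the surface normal is assumed to be normalized.

```cpp
#include <cmath>

// Minimal stand-in vector type (assumption), also used here for RGB colors.
struct Vec3 { double x, y, z; };
Vec3 operator+(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3 operator-(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
Vec3 operator*(Vec3 a, double s) { return {a.x * s, a.y * s, a.z * s}; }
double dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
Vec3 normalize(Vec3 v) { return v * (1.0 / std::sqrt(dot(v, v))); }

struct Ray { Vec3 origin, direction; };

// Mirrors the incoming direction about the surface normal: r = d - 2(d.n)n.
Vec3 reflect(Vec3 incident, Vec3 normal) {
    return incident - normal * (2.0 * dot(incident, normal));
}

// Builds the reflection ray, with its origin nudged along the reflected
// direction so it does not re-intersect the surface it starts on.
Ray makeReflectionRay(Vec3 hitPoint, Vec3 incidentDir, Vec3 normal) {
    const double kEpsilon = 1e-4;  // assumed value; tune for the scene's scale
    Vec3 r = normalize(reflect(incidentDir, normal));
    return {hitPoint + r * kEpsilon, r};
}

// Linear interpolation between the local Phong color and the reflected color.
Vec3 blendReflection(Vec3 phongColor, Vec3 reflectedColor, double reflectivity) {
    return reflectedColor * reflectivity + phongColor * (1.0 - reflectivity);
}
```

With a reflectivity of 0 the blend returns the Phong color unchanged, and with 1 it returns only the reflected color.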

Reflective spheres (note: this scene has two lights)

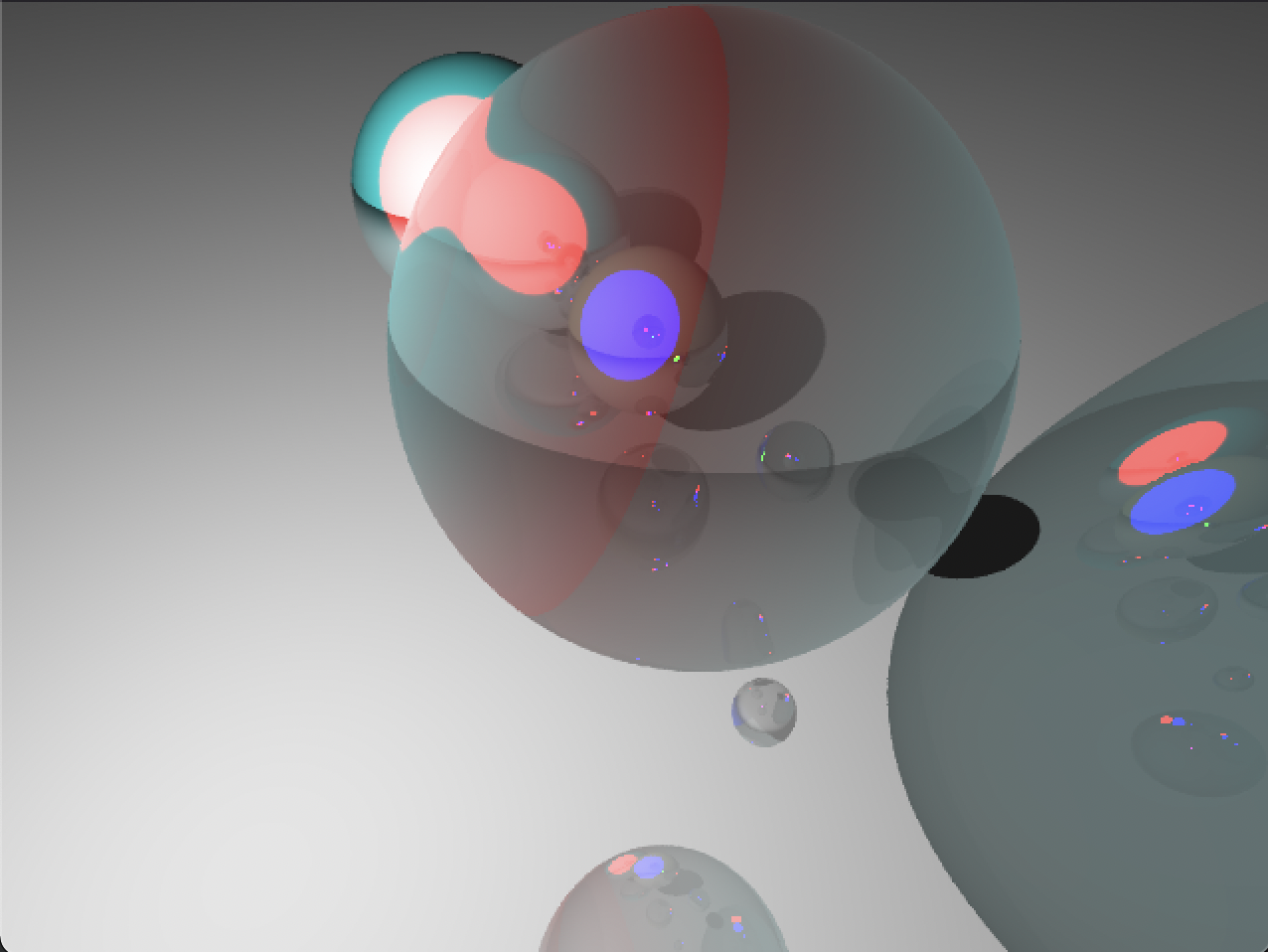

Additionally, the Material class supports transparency and refraction. Only reflection is required but you are encouraged to add in a transparent & refractive implementation as well.

A refractive sphere (note: the sphere and shadow reflect the transparency)

Structuring Your Code

Since the operations involved with determining shadows and reflection are recursive, it makes sense to write functions to support this. For instance, use the function called intersectRayAgainstScene() that intersects a ray against all objects and returns the closest intersection, if any. This will keep your raytraceImage() function much cleaner. Additionally, you have a second function, shadeIntersection(), that computes the color for a given intersection point and returns it. It makes sense to keep this as its own function, since you will have to handle shadow rays (use the intersectRayAgainstScene() function) and reflection rays (call intersectRayAgainstScene() with a reflection ray and then recursively call shadeIntersection() to determine the reflected color). For shadow rays, you may want to write a version of intersectRayAgainstScene() that returns true as soon as it intersects any object, and doesn't bother computing the nearest intersection, for speed purposes. Additionally, you may want to clamp your color value before returning it, in case it lies outside the range of [0,1].
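A rough sketch of the helper functions described above follows. The signatures are assumptions, since the base code's actual declarations are not shown here: Hit is a simplified stand-in for IntersectionData with an added distance field, anyIntersection() and clampColor() are hypothetical names, and the scene is passed as a vector of Shape pointers.

```cpp
#include <algorithm>
#include <cmath>
#include <limits>
#include <vector>

// Minimal stand-in vector type (assumption); the base code's own vector type would replace it.
struct Vec3 { double x, y, z; };
Vec3 operator-(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
double dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

struct Ray { Vec3 origin, direction; };  // direction assumed normalized

// Simplified stand-in for IntersectionData; t is an added distance field.
struct Hit { bool wasValidIntersection = false; double t = 0.0; };

// Reduced version of the abstract Shape interface (assumed signature).
struct Shape {
    virtual ~Shape() = default;
    virtual Hit intersectWithRay(const Ray& ray) const = 0;
};

struct Sphere : Shape {
    Vec3 center; double radius;
    Sphere(Vec3 c, double r) : center(c), radius(r) {}
    Hit intersectWithRay(const Ray& ray) const override {
        Hit hit;
        Vec3 oc = ray.origin - center;
        double b = dot(oc, ray.direction);
        double disc = b * b - (dot(oc, oc) - radius * radius);
        if (disc < 0.0) return hit;
        double t = -b - std::sqrt(disc);
        if (t <= 0.0) t = -b + std::sqrt(disc);
        if (t <= 0.0) return hit;
        hit.wasValidIntersection = true;
        hit.t = t;
        return hit;
    }
};

// Tests every shape and keeps the hit with the smallest distance.
Hit intersectRayAgainstScene(const Ray& ray, const std::vector<const Shape*>& shapes) {
    Hit closest;
    double best = std::numeric_limits<double>::infinity();
    for (const Shape* shape : shapes) {
        Hit h = shape->intersectWithRay(ray);
        if (h.wasValidIntersection && h.t < best) { best = h.t; closest = h; }
    }
    return closest;
}

// Shadow-ray variant: stops at the first hit closer than maxDistance.
bool anyIntersection(const Ray& ray, const std::vector<const Shape*>& shapes,
                     double maxDistance) {
    for (const Shape* shape : shapes) {
        Hit h = shape->intersectWithRay(ray);
        if (h.wasValidIntersection && h.t < maxDistance) return true;
    }
    return false;
}

// Clamps each color channel into [0, 1].
Vec3 clampColor(Vec3 c) {
    return {std::min(1.0, std::max(0.0, c.x)),
            std::min(1.0, std::max(0.0, c.y)),
            std::min(1.0, std::max(0.0, c.z))};
}
```

Passing the distance to the light as maxDistance keeps objects behind the light from casting shadows.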

scene.txt File Format

The scene.txt file format is outlined below. There are four different types of lines that are accepted as input. First, a material must be specified that will be applied to all the following shapes. Any number of spheres and planes may be specified. Lastly, at least one light must be specified, but any number can be accepted.

material diffuseR G B ambientR G B specularR G B shininess reflectance indexOfRefraction alpha

sphere x y z radius

plane x y z normalX Y Z

light x y z diffuseR G B ambientR G B specularR G B

The material has the diffuse, ambient, and specular colors specified along with the shininess. The reflectance value states how reflective the material is. You can use this value to lerp between the object's material and the reflected ray's material. A value of 0.0 is no reflectance, whereas a value of 1.0 is full reflectance (the perfect reflector we alluded to).

The sphere is represented by some (x, y, z) point in space with a radius r.

The plane is represented by point-normal form. A point in the plane is (x, y, z) with surface normal (X, Y, Z).

The light is positioned at (x, y, z) with diffuse, ambient, and specular colors.

Take a look at the included scene.txt file for how these are used in conjunction. Feel free to create new scene files experimenting with different positions, materials, reflective objects, etc.
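When writing your own scene files, a quick way to sanity-check a line is to count its numeric fields against the four formats above (13 for material, 4 for sphere, 6 for plane, 12 for light). The sketch below is not the base code's loader; ParsedLine and parseSceneLine() are hypothetical names, and the numeric values in any sample line are made up for illustration.

```cpp
#include <cstddef>
#include <sstream>
#include <string>
#include <vector>

// Hypothetical result type: the keyword, the numbers after it, and whether
// the number count matches the format for that keyword.
struct ParsedLine {
    std::string keyword;
    std::vector<double> values;
    bool ok = false;
};

// Splits one scene.txt line into a keyword and its numeric fields.
ParsedLine parseSceneLine(const std::string& line) {
    ParsedLine out;
    std::istringstream in(line);
    if (!(in >> out.keyword)) return out;  // blank line: nothing to parse

    double value = 0.0;
    while (in >> value) out.values.push_back(value);

    // Expected field counts, taken from the four line formats.
    std::size_t expected = 0;
    if (out.keyword == "material")    expected = 13;  // 3 colors x 3 + shininess, reflectance, IOR, alpha
    else if (out.keyword == "sphere") expected = 4;   // x y z radius
    else if (out.keyword == "plane")  expected = 6;   // point + normal
    else if (out.keyword == "light")  expected = 12;  // position + 3 colors x 3
    else return out;                                  // unknown keyword

    out.ok = (out.values.size() == expected);
    return out;
}
```

For example, parseSceneLine("sphere 0 1 -5 2.5") yields the keyword "sphere" with four values, while a plane line with only five numbers is reported as malformed.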

Part II - Website

Update the webpage that you submitted with FP to include an entry for this assignment. As usual, include a screenshot (or two) and a brief description of the program, intended to showcase what your program does to people who are not familiar with the assignment.

Grading Rubric

To earn the achievement, your program must meet the requirements stated above.

In addition: In order to earn the achievement, this side quest must be submitted prior to Sunday, December 10, 2023 11:59pm. Late submissions will be accepted and added to the student's page, but the achievement will not be awarded for a late submission.

Experience Gained & Available Achievements

Experience gained: Web +50 XP

Achievement available: Dr. Ray Stantz

Submission

When you have completed the assignment, zip together your source code. Name the zip file HeroName_SQ2.zip.

Upload this file to Canvas under SQ2.
